
What Is and Is Not a LawGPT?

Optimizing performance in the legal domain

LawGPT - Improving the Performance of LLMs in Specialty Domains

This week kicked off with the annual LegalWeek in NYC, which draws a large number of customers and vendors to the Hilton in Midtown. Generative AI was certainly this year’s topic du jour, with most if not all vendors purporting to release some sort of GenAI offering. In observing some of the dialog on Generative AI, I noticed clear confusion about the techniques being used and about the actual ‘value add’ each offering delivers to the user. Some of this confusion was quite innocent. Some of it, of course, is created by vendors seeking to obfuscate the fact that they are asking for a massive premium while offering very little beyond the base capabilities of leading LLMs.

Ultimately, for any technology tool, the proof is in the pudding. Still, the distinctions discussed below matter, because it pays to be an informed consumer of any offering you are considering purchasing. How robust is the offering? How much markup am I paying? How dependent is a given vendor on a particular LLM? Does the vendor actually own the underlying model or the other IP it is using?

So, as a primer, let’s walk through the approaches being taken in the respective products, and the distinction between using engineering techniques to improve performance in the legal domain and an actual LawGPT model trained from first principles.

Engineering Techniques to Improve the Performance of Foundation Models within Specific Domains

Many of the Large Language Models developed to date are general-purpose models: LLMs trained on vast amounts of data covering a wide range of topics. You’ve likely heard of the big players: OpenAI’s GPT-4, Meta’s Llama 2, and Google’s Gemini. These General Foundation Models can undertake a wide range of tasks, from writing a Shakespearean sonnet to crafting a lasagna recipe to taking the bar exam.

General Foundation Models - e.g., GPT-4, Claude 2, Llama 2, PaLM 2, and Gemini

General Foundation Models are both expensive and time-consuming to create. As a result, a General Foundation Model is ‘out of the box’ technology: its baseline capabilities and content are baked in once training is complete. Because those core capabilities are fixed, it can be quite a challenge to address the model’s shortcomings directly. However, a range of engineering techniques can help improve the performance of general models on particular domain-specific tasks.

Augmentation Techniques for General Foundation Models

- Prompt Engineering

- Retrieval Augmented Generation

- Fine Tuning with Relevant Data

The purpose of these and other engineering techniques is to elicit high-quality responses for problems specific to particular domains or industries. In prior posts, I highlighted several of these techniques, including Prompt Engineering and Retrieval Augmented Generation (RAG).
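Prompt engineering is the lightest-weight of these techniques: the model itself is untouched and only the input changes. As a minimal sketch, assuming a legal question-answering use case, the Python below assembles a structured prompt from a role statement, an instruction to stay within the supplied document, a few worked examples, and the actual question. The function name `build_legal_prompt` and the wording of every instruction are hypothetical and would need tuning against whichever LLM receives the prompt.

```python
def build_legal_prompt(question: str, document: str, examples: list[tuple[str, str]]) -> str:
    """Assemble a structured prompt: role, instructions, few-shot examples, document, question."""
    parts = [
        # Role and ground rules (illustrative wording, not any vendor's template).
        "You are an assistant supporting a licensed attorney.",
        "Answer only from the document provided. If the answer is not in the document, say so.",
    ]
    # Few-shot examples demonstrate the expected answer format.
    for q, a in examples:
        parts.append(f"Example question: {q}\nExample answer: {a}")
    parts.append(f"Document:\n{document}")
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


prompt = build_legal_prompt(
    question="What law governs this agreement?",
    document="This Agreement shall be governed by the laws of the State of New York.",
    examples=[("Who are the parties?", "Acme Corp. and Beta LLC.")],
)
print(prompt)
```

The few-shot examples show the model the expected answer format before it sees the real question. The instruction to answer only from the document is a common way to discourage the model from inventing facts, though it does not guarantee that behavior.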

You can think of these approaches as lying on a continuum, with fine-tuning representing a more complex approach to plugging holes in the baseline foundation model. Fine-tuning an LLM amounts to giving it extra resources to specialize in a particular industry. Much as professional education (law school, medical school, business school) provides students with an information diet of specialized concepts and vocabulary within a given domain, a model can benefit from such an information diet.

In fine-tuning, a base LLM is typically given additional training on a dataset from the domain so that it becomes familiar with the nuances and specific requirements of that field. Through this training, the model’s parameters adjust to specialize in the tasks and language of the domain.

RAG, by contrast, leaves the model’s parameters unchanged and adds an external layer around the foundation LLM. The user’s input is used to search a large corpus of text that sits outside the LLM; the most relevant passages are retrieved, combined with the original query, and fed to the LLM. This grounds the model’s answer in the retrieved material, making it more likely to respond accurately to the original prompt.
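The retrieve-then-augment flow of RAG can be sketched in a few lines of Python. This is a toy, assuming a three-passage corpus and simple word-overlap (cosine) scoring in place of the vector embeddings and search index a production RAG system would use. `CORPUS`, `STOPWORDS`, `retrieve`, and `augment` are hypothetical names, and the final call to an LLM is left out: the string `augment` returns is what would be sent to the model.

```python
import math
import re
from collections import Counter

# Hypothetical external corpus; a real system would search a large document store.
CORPUS = [
    "A non-compete clause restricts an employee from working for a competitor after leaving.",
    "Force majeure excuses performance when events beyond a party's control occur.",
    "An indemnification clause allocates responsibility for losses between the parties.",
]

# Tiny illustrative stopword list so common words do not dominate the scores.
STOPWORDS = {"a", "an", "the", "what", "does", "do", "for", "from", "when", "after", "of"}


def vectorize(text: str) -> Counter:
    """Bag-of-words counts; a stand-in for an embedding model."""
    return Counter(w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 1) -> list[str]:
    """Return the k passages most similar to the query."""
    q = vectorize(query)
    return sorted(corpus, key=lambda doc: cosine(q, vectorize(doc)), reverse=True)[:k]


def augment(query: str, corpus: list[str], k: int = 1) -> str:
    """Couple the retrieved passages with the original query; this is the text the LLM would receive."""
    context = "\n".join(f"- {p}" for p in retrieve(query, corpus, k))
    return f"Answer using the context below.\nContext:\n{context}\n\nQuestion: {query}"


print(augment("What does a force majeure clause do?", CORPUS))
```

Swapping the scoring function for embedding similarity leaves the rest of the pipeline unchanged. That separation is part of RAG’s appeal: the reference material lives in the corpus, so updating it does not require retraining the model.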

Foundation Models Fine-Tuned for Specialized Domains - e.g., Med-PaLM 2

Med-PaLM 2 is an example of a foundation model that was subsequently fine-tuned for the medical domain. That is, Med-PaLM 2 was not built from the ground up and thus was not trained solely, or even primarily, on medical data. Instead, the model is the product of taking Google’s general-purpose large language model PaLM 2 (the second generation of the Pathways Language Model, PaLM) and fine-tuning it on medical literature and clinical case notes so that it is specialized for medical applications.
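The core idea of fine-tuning, continuing to train an existing model’s parameters on new domain data rather than starting over, can be illustrated at toy scale. In the sketch below, which is an analogy and not how Med-PaLM 2 or any LLM is actually trained, a two-parameter linear model stands in for the LLM. It is first ‘pretrained’ on broad data, then trained further on a small, invented domain dataset starting from the pretrained weights. The data, learning rates, and step counts are all assumptions chosen for illustration.

```python
def train(w: float, b: float, data: list[tuple[float, float]], lr: float, steps: int) -> tuple[float, float]:
    """Gradient descent on mean squared error for y ~ w*x + b, starting from the given (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the linear model on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# 'Pretraining' data: a broad pattern, y = 2x (invented for illustration).
general = [(float(x), 2.0 * x) for x in range(-5, 6)]
# 'Domain' data: the same slope plus an offset the base model never saw, y = 2x + 3.
domain = [(float(x), 2.0 * x + 3.0) for x in range(0, 4)]

# Base model: trained from scratch on general data only.
w0, b0 = train(0.0, 0.0, general, lr=0.01, steps=2000)
# Fine-tuned model: training continues from the base weights, on domain data only.
w1, b1 = train(w0, b0, domain, lr=0.05, steps=500)

print(f"base model loss on domain data:       {mse(w0, b0, domain):.4f}")
print(f"fine-tuned model loss on domain data: {mse(w1, b1, domain):.4f}")
```

The base model fits the general data but misses the domain’s systematic offset; after fine-tuning from the base weights, its loss on the domain data drops close to zero.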

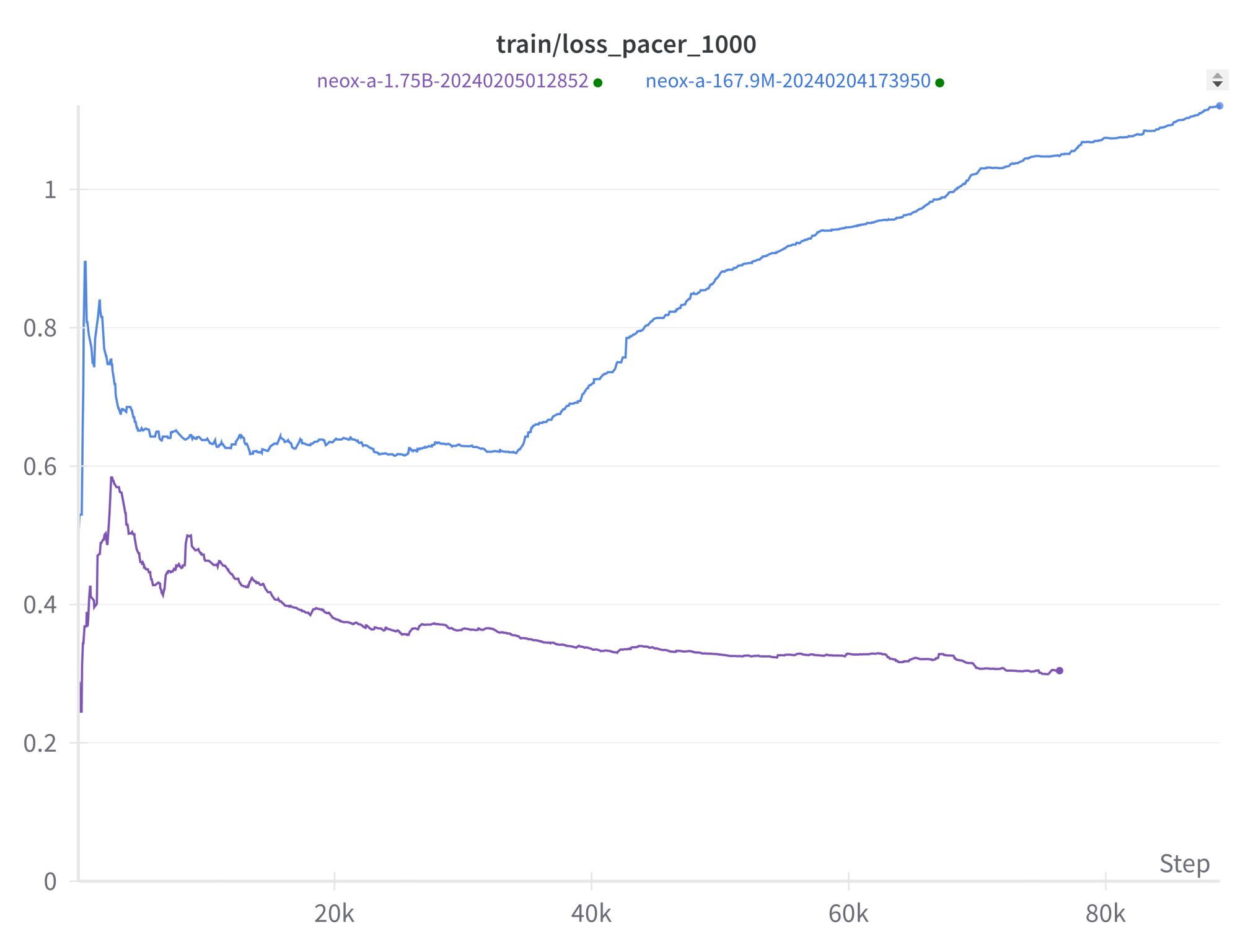

An Example of a Domain Model Built from First Principles - BloombergGPT

General-purpose models that have been modified for domain-specific use contrast with domain-specific foundation models: large language models built from first principles and trained primarily on a narrow, field-specific set of data. These models are used to perform and support tasks within a particular industry, such as healthcare, law, or finance. For example, BloombergGPT is an LLM that was trained on a mixture of general data (e.g., the Pile) and a large diet of financial data. We call it a domain-specific foundation model because it was created with the sole purpose of performing and supporting tasks within the domain of finance.
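Training on a mixture of general and domain data is commonly implemented by sampling each training example from one of several sources according to mixture weights. The Python sketch below shows that idea; the placeholder documents, the source names, and the 50/50 weights are assumptions for illustration, not BloombergGPT’s published recipe.

```python
import random
from collections import Counter

# Placeholder corpora; real pretraining sources contain billions of tokens.
SOURCES = {
    "general": ["general web text", "encyclopedia article", "public-domain book"],
    "financial": ["earnings call transcript", "regulatory filing"],
}
# Illustrative mixture weights (assumed), not any published training recipe.
WEIGHTS = {"general": 0.5, "financial": 0.5}


def sample_mixture(
    sources: dict[str, list[str]], weights: dict[str, float], n: int, seed: int = 0
) -> list[tuple[str, str]]:
    """Draw n training examples: pick a source by weight, then a document within that source."""
    rng = random.Random(seed)
    names = list(sources)
    source_weights = [weights[name] for name in names]
    batch = []
    for _ in range(n):
        source = rng.choices(names, weights=source_weights, k=1)[0]
        batch.append((source, rng.choice(sources[source])))
    return batch


batch = sample_mixture(SOURCES, WEIGHTS, n=10_000)
counts = Counter(source for source, _ in batch)
print({name: round(count / len(batch), 3) for name, count in counts.items()})
```

Raising the financial weight shifts the model’s information diet toward the domain, while the general share is meant to preserve broad language ability. Settling that balance is one of the central design choices for a domain-specific foundation model.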

Domain-Specific Foundation Models Built from First Principles - e.g., BloombergGPT, Kelvin Legal GPT

The Case for a LawGPT – A Domain-Specific Model Built from the Ground Up

There is currently a debate in the field over whether a large general model combined with engineering techniques will outperform a domain-specific foundation model. Because very few domain-specific models have been trained from first principles, it is not yet possible to fully evaluate this proposition.

We believe that a legal-specific GPT-style model trained on a strong, high-quality diet of input data, combined with a series of clever techniques, will deliver strong performance on at least a subset of legal-specific tasks. We are building our Kelvin Legal GPT as we speak.

At first glance, it might seem that all domain-specific approaches, whether trained from first principles or not, should be judged solely on task performance. However, especially if performance is even roughly equivalent, there are several reasons to opt for domain-specific foundation models:

1. Cost Effectiveness. Domain-specific foundation models typically do not need nearly as many parameters or training tokens to work well. Consequently, they are more cost-effective to build, run, maintain, and update.

2. Sustainability. Because they are smaller, domain-specific foundation models require less energy to train and run. Less energy translates to lower carbon emissions and a more sustainable option.

3. Security. Because of their small size, domain-specific foundation models can be kept ‘on prem’, giving an organization direct control over security.

4. Copyright Infringement. Engineers can know and control the input data of a model that is built from the ground up. Not only can the input data be curated for quality, but engineers can also ensure that the training data does not violate copyright law.
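The cost and sustainability points above come down to model size. A back-of-envelope sketch using two common rules of thumb makes the scaling concrete: training compute of roughly 6 × N × D floating-point operations for N parameters and D training tokens, and about 2 bytes per parameter to store weights in 16-bit precision. Both model specifications below are hypothetical round numbers, not figures for Kelvin Legal GPT or any named model.

```python
from dataclasses import dataclass

BYTES_PER_PARAM = 2  # assumes 16-bit (fp16/bf16) weights


@dataclass
class ModelSpec:
    name: str
    params: float  # N: number of parameters
    tokens: float  # D: number of training tokens

    def training_flops(self) -> float:
        # Rule of thumb: roughly 6 FLOPs per parameter per training token.
        return 6 * self.params * self.tokens

    def weight_memory_gb(self) -> float:
        # Memory for the weights alone; activations, caches, and optimizer state are extra.
        return self.params * BYTES_PER_PARAM / 1e9


# Hypothetical sizes chosen for illustration only.
domain_model = ModelSpec("hypothetical domain model", params=7e9, tokens=1e12)
general_model = ModelSpec("hypothetical general model", params=70e9, tokens=2e12)

for m in (domain_model, general_model):
    print(f"{m.name}: {m.training_flops():.1e} training FLOPs, {m.weight_memory_gb():.0f} GB of weights")

print(f"compute ratio: {general_model.training_flops() / domain_model.training_flops():.0f}x")
print(f"memory ratio:  {general_model.weight_memory_gb() / domain_model.weight_memory_gb():.0f}x")
```

Under these assumptions, a model with one-tenth the parameters trained on half the tokens needs about one-twentieth of the training compute and one-tenth of the weight memory. That smaller footprint is also what makes on-prem deployment practical.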

Jessica Mefford Katz, PhD

Jessica is a Co-Founding Partner and a Vice President at 273 Ventures.

Jessica holds a Ph.D. in Analytic Philosophy and applies the formal logic and rigorous frameworks of the field to technology and data science. She is passionate about assisting teams and individuals in leveraging data and technology to more accurately inform decision-making.

Would you like to learn more about the AI-enabled future of legal work? Send your questions to Jessica by email or LinkedIn.