
Thoughts on Three Studies Analyzing the *Minimum* Utility of GPT-4 in Professional Services

The best is yet to come


To anyone who has used GPT-4 (particularly 32k+), it is clear that the capabilities of LLMs are likely to have significant utility in the world of professional services. While the precise scale and scope of that utility are subject to evaluation, the notion that LLMs will reshape the work of professionals appears evident.

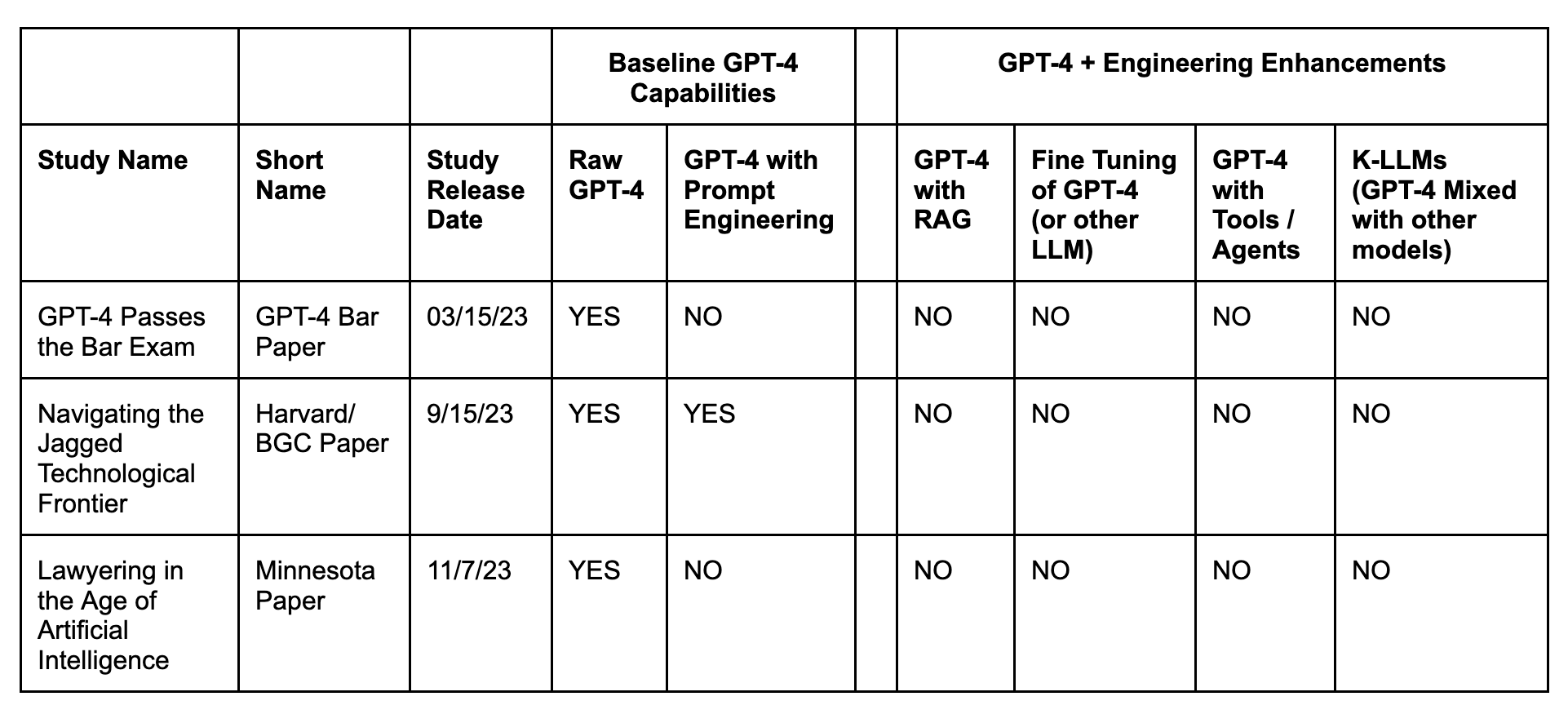

Three recent studies have attempted to measure the efficacy of GPT-4 in completing tasks and enhancing productivity. The first of these studies - GPT-4 Passes the Bar Exam - was released earlier this year. More recently, researchers at Harvard/BCG and the University of Minnesota have released working papers analyzing the utility of GPT-4 within the context of legal and professional services. In this post, I highlight the latter two studies and consider what, if anything, they might tell us about the path forward for Generative AI in legal services.

Harvard Business School / BCG Study on GPT-4 in Consulting

A working paper from Harvard Business School, conducted with the Boston Consulting Group (BCG), a global management consulting firm, explored the practicality of AI tools, specifically Large Language Models (LLMs) like GPT-4, for realistic knowledge work.

The researchers introduce the concept of a “jagged technological frontier,” where AI’s capabilities vary significantly across different tasks. Some tasks are easily accomplished by AI, while others, seemingly similar in difficulty, are beyond AI’s current capabilities. The study revealed that for tasks within AI’s capabilities, consultants using AI showed significantly higher productivity and quality in their outputs. Specifically, they completed 12.2% more tasks, 25.1% faster, and produced results of over 40% higher quality compared to a control group. However, for a task selected as being outside the frontier of AI’s capabilities, consultants using AI were 19 percentage points less likely to produce correct solutions than those not using AI. This highlights the critical importance of understanding AI’s limitations and strengths.

The study also observed two distinct patterns of AI usage: “Centaurs,” who strategically divide tasks between themselves and AI, and “Cyborgs,” who integrate AI into their workflow more completely. These findings underscore the evolving nature of AI’s impact on knowledge work and highlight the need for professionals to skillfully navigate their usage of such models.

Minnesota Study Analyzing GPT-4 Use By Law Students

Researchers at the University of Minnesota examined how AI assistance affects law students' legal analysis across various tasks. Sixty law students were randomly assigned to complete four legal tasks each - drafting a complaint, a contract, an employee handbook section, and a client memo - with or without GPT-4 assistance.

In an effort to ensure that the students were capable of “effectively” using GPT-4, the professors required the students to watch three brief pre-recorded videos on how to use GPT-4 and answer a handful of short questions. The results, blind-graded and timed, showed that GPT-4 modestly improved the quality of legal analysis but significantly increased task completion speed.

With regard to the quality of task completion, GPT-4 showed the greatest benefit to lower-skilled students. AI (at least in part) leveled the playing field for these students. Among all students, however, AI increased the speed of task completion.

GPT-4 Out-of-the-Box is Not Really the Point — LLMs + Real Engineering is the Future of Generative AI & Law

As a science-centric enterprise, we here on the Kelvin / 273 Team certainly think it useful to validate and quantify the utility of GPT-4 in various settings. However, it is important to situate these results in the broader industrial context. All three papers are ‘lower bound’ results. In other words, they do not reflect the full capabilities of the technology. As reflected in the table above, language models such as GPT-4 can be connected to other data, tools, engineering, and agents to produce much stronger results than they do in raw form alone.

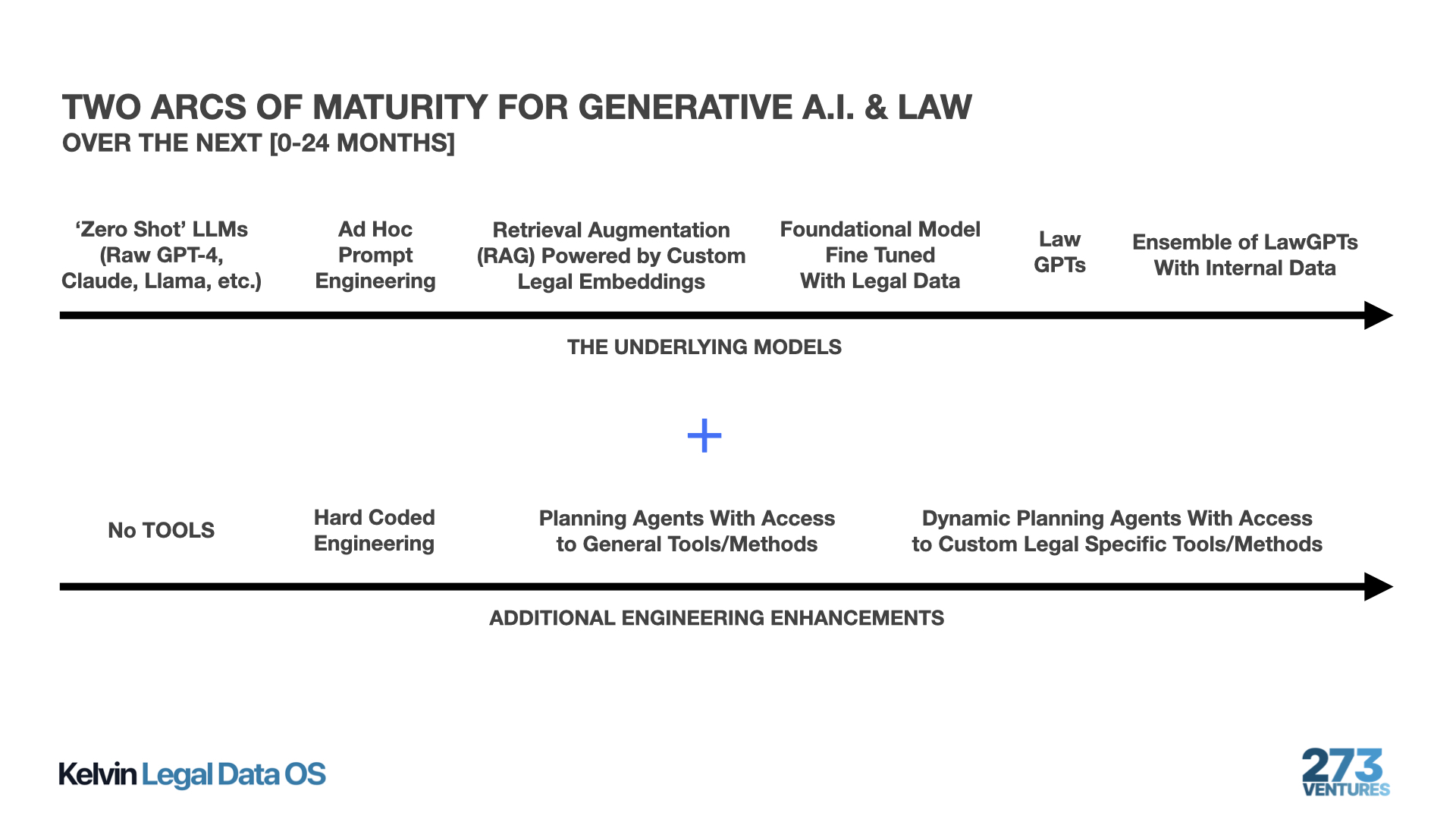

As highlighted (in part) at the recent OpenAI DevDay, the future of Generative AI broadly and Generative AI in law is not really about the out-of-the-box capabilities of LLMs but rather how to combine LLMs with a host of engineering techniques built on top of the base capabilities.
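To make "combining an LLM with engineering" a bit more concrete, here is a minimal Python sketch of one possible pattern: a thin routing layer that sends a task to a domain-specific tool when one applies and otherwise falls back to the model. This is an illustration under stated assumptions, not a description of how Kelvin, GPT-4, or any real product works. Every name in it (`stub_model`, `lookup_tool`, `Orchestrator`) is hypothetical, the "model" is a stub rather than a real API call, and the tool's data is placeholder text.

```python
from dataclasses import dataclass
from typing import Callable, Dict


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model call; no real API is used."""
    return f"[model draft] {prompt}"


def lookup_tool(query: str) -> str:
    """Hypothetical domain-specific tool backed by a tiny in-memory store."""
    store = {"statute of frauds": "Placeholder summary of the rule."}
    return store.get(query.lower(), "no entry found")


@dataclass
class Orchestrator:
    """Assumed routing layer: tasks shaped like 'tool_name: argument' go to a tool first."""
    model: Callable[[str], str]
    tools: Dict[str, Callable[[str], str]]

    def run(self, task: str) -> str:
        # Split on the first colon; the left side may name a registered tool.
        name, sep, arg = task.partition(":")
        tool = self.tools.get(name.strip())
        if sep and tool is not None:
            # Ground the model's prompt in the tool's result.
            evidence = tool(arg.strip())
            return self.model(f"{arg.strip()} (tool result: {evidence})")
        # No matching tool: fall back to the raw model.
        return self.model(task)


if __name__ == "__main__":
    orchestrator = Orchestrator(model=stub_model, tools={"lookup": lookup_tool})
    print(orchestrator.run("Summarize the memo"))
    print(orchestrator.run("lookup: Statute of Frauds"))
```

The point of the sketch is only the shape: the raw model is one component among several, and the routing, retrieval, and tooling around it are where much of the additional capability would come from.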

As discussed in our recent post, the Kelvin / 273 Team is working to reach the mountaintop - a world of legal-specific foundational models (i.e., Law GPTs) which are connected to and combined with domain-specific legal tools/methods through an orchestration layer (via legal agents). The following is a graphic sketch of two arcs of development for Generative AI & Law – from raw zero-shot capabilities of LLMs to much stronger results through combinations of data, tools, engineering, and agents. So while these studies do offer partial insight into the raw capabilities (and limitations) of foundational models such as GPT-4, they are far from the last word on the issue.

Jessica Mefford Katz, PhD

Jessica is a Co-Founding Partner and a Vice President at 273 Ventures.

Jessica holds a Ph.D. in Analytic Philosophy and applies the formal logic and rigorous frameworks of the field to technology and data science. She is passionate about assisting teams and individuals in leveraging data and technology to more accurately inform decision-making.

Would you like to learn more about the AI-enabled future of legal work? Send your questions to Jessica by email or LinkedIn.