
31 Flavors (and Counting) of Kelvin Legal Embeddings

Kelvin Legal Embeddings Powered by the Kelvin Legal DataPack

What are Embeddings? A Brief Overview

Embeddings are the bridge between the human world of language and the machine world of numbers, allowing AI models to process and generate textual data. In our recent three-part series on embeddings, we gave a detailed explanation of what embeddings are and why they matter. Recall that computers inherently process numbers, so if we want them to process textual data - words, phrases, documents - we have to convert that text into a numerical format. Embeddings perform this conversion. Think of them as numerical codes that enable computers to infer the meaning of words in a particular context.

Word embeddings are created using neural networks, computational systems loosely inspired by the human brain. Large language models (LLMs) such as GPT-4, Claude, and Llama develop and refine these embeddings by processing vast amounts of textual data. Each word is fed into the neural network and converted into a multi-dimensional numerical vector, its embedding. As the model trains, it adjusts these embeddings so that words with similar meanings are positioned closer to each other in this multi-dimensional space. As discussed in the highly acclaimed Word2Vec paper, embeddings can help infer semantic relationships, such as how the relationship between “king” and “queen” is similar to the relationship between “man” and “woman.”
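To make the “king” and “queen” analogy concrete, here is a minimal Python sketch. The three-dimensional vectors are hand-picked, hypothetical values chosen purely for illustration; they do not come from Word2Vec, Kelvin, or any trained model, and real embeddings typically have hundreds of dimensions. The helper names `cosine_similarity` and `analogy` are our own.

```python
import math

# Hypothetical, hand-picked vectors for illustration only (not learned values).
TOY_EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.2, 0.8],
    "apple": [0.0, 0.1, 0.1],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, embeddings):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [word for word in embeddings if word not in {a, b, c}]
    return max(candidates, key=lambda word: cosine_similarity(embeddings[word], target))


result = analogy("king", "man", "woman", TOY_EMBEDDINGS)
print(result)  # queen, with these toy vectors
```

With a trained model the match is rarely exact, but the same arithmetic applies: subtracting and adding vectors moves along directions in the space that correspond to shared meaning.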

The accuracy of these spatial representations is enhanced when the model encounters numerous instances of the same word used in various substantive contexts. When operating at a very large scale, such as with GPT-4 (which likely possesses over a trillion parameters), the model becomes adept at discerning even the finest nuances in word meanings. Ultimately, embeddings act as a foundational blueprint for words in LLMs. Well-trained embeddings capture the semantic meaning of words and allow models to grasp subtleties in meaning, detect synonyms, and even understand analogies.

Well-Trained Embeddings in Specific Domains: Key Components

Legal language is full of nuance and complexity. As discussed in a recent ACL paper, “the legal domain has rare vocabulary terms such as ‘Habeas Corpus’, that are rarely found in domain independent corpora. In addition, some common words imply a context specific meaning in the legal domain. For example the word ‘Corpus’ in ‘Habeas Corpus’ and ‘Text Corpus’ gives different meanings in the legal domain. This aspect is also not captured when training with general corpora. Also approaches that are specifically designed for the legal domain should be researched to address the inherent complexities of the domain. Considering all these facts, this paper discusses the designing and training of a legal domain specific sentence embedding based on criminal sentence corpora.”

While models built on large bodies of information (including mixtures of general English and legal English) can offer very solid performance on certain tasks, some legal problems can still prove challenging.

As highlighted in our prior post on embeddings, consider our M&A example from the Kelvin Docs Page. In this example, we combine Kelvin Vector (our vector database) with Kelvin Embeddings to support retrieval augmentation in the context of an M&A diligence checklist. We review a series of hypothetical executive employment agreements and attempt to determine both the salary of the employee and their bonus plan (if applicable). Kelvin’s smallest and (in turn) fastest model, en-001-small, outperforms OpenAI’s text-embedding-ada-002 on this M&A test case. OpenAI’s model returned a series of tax-related results, failing to recall the relevant documents or text segments, while Kelvin Embeddings returned the correct results for the problem. In short, text-embedding-ada-002 is not well suited to this retrieval task, and Kelvin Embeddings produced the more accurate and useful results.
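The retrieval step behind an example like this can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the Kelvin Vector or Kelvin Embeddings API: the passages are invented, and their three-dimensional vectors are made-up stand-ins for what an embedding model would produce. The function `top_k` and the variable names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Invented passages paired with made-up vectors. In a real system an embedding
# model would produce these vectors and a vector database would store them.
PASSAGES = [
    ("Executive shall receive an annual base salary.", [0.9, 0.1, 0.0]),
    ("Executive is eligible for an annual performance bonus.", [0.8, 0.2, 0.1]),
    ("The Company may withhold applicable taxes.", [0.2, 0.9, 0.0]),
    ("Either party may terminate this Agreement upon notice.", [0.0, 0.1, 0.9]),
]


def top_k(query_vector, passages, k=2):
    """Rank passages by cosine similarity to the query and keep the best k."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Made-up vector standing in for the embedded question about salary and bonus.
query_vector = [0.85, 0.15, 0.05]
results = top_k(query_vector, PASSAGES)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

In this toy setup the compensation passages rank above the tax and termination passages because their vectors point in nearly the same direction as the query. In the M&A example itself, the vectors come from the embedding model, which is where the choice of model matters.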

We can glean an important lesson from this example. Megascale models such as those created by OpenAI do not always produce superior results. Even though OpenAI’s text-embedding-ada-002 embeddings were trained on a significantly larger dataset, they did not outperform Kelvin’s smaller embedding model, en-001-small. Many foundational models have an inconsistent diet of input information, including not only high-fidelity sources but also content such as Reddit. Much as an athlete benefits from a diet of lean proteins (e.g. high-quality legal materials) rather than potato chips (e.g. certain corners of the internet), the quality of input materials can materially impact the performance of language models. Rather than being fed an enormous information diet that covers every conceivable topic - from cooking to news to computer science to Reddit - Kelvin Embeddings have been trained on a large but focused dataset of legal, regulatory and financial documents. As a result of this strong and steady diet of relevant legal information, Kelvin Embeddings are equipped to effectively and accurately represent the meaning of legal-specific terminology and tokens.

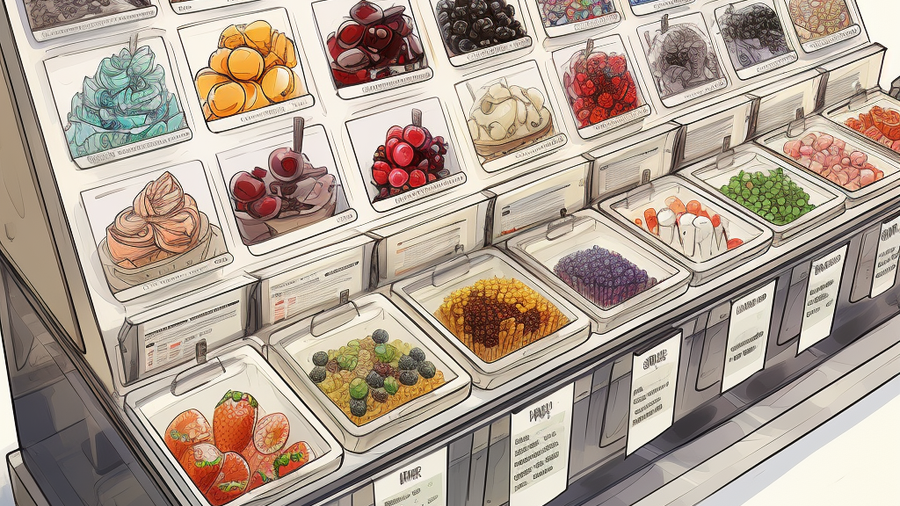

Introducing Kelvin Legal Embeddings: 31 Flavors (and Counting)

The example above uses the Kelvin embedding model en-001-small, which is trained on the overall Kelvin Legal DataPack. The Kelvin Legal DataPack is an extensive dataset of more than 200 billion legal tokens derived from legal, regulatory and financial texts from many jurisdictions. It has clear provenance from an IP perspective and can be used to support several different points within the generative AI maturity continuum. For example, one use for the Kelvin Legal DataPack is to create a series of custom embedding models. To ensure that our embeddings are well-trained, we have created different embeddings for different problems in law. In fact, like a well-known ice cream franchise, we are currently offering 31 flavors of embeddings. These include Contract Embedding, Laws & Rules Embedding (US, UK, EU, or combined), General Legal and Financial Embeddings, and many others. The Kelvin Legal Data OS features the tools to make custom embeddings (including those which combine your data with data in our Kelvin Legal DataPack). Among other things, our 31 Flavors of Kelvin Embeddings support retrieval augmented generation (RAG) by allowing retrieval systems to offer more nuanced and context-sensitive results, thus improving the efficiency and accuracy of RAG.

Jessica Mefford Katz, PhD

Jessica is a Co-Founding Partner and a Vice President at 273 Ventures.

Jessica holds a Ph.D. in Analytic Philosophy and applies the formal logic and rigorous frameworks of the field to technology and data science. She is passionate about assisting teams and individuals in leveraging data and technology to more accurately inform decision-making.

Would you like to learn more about the AI-enabled future of legal work? Send your questions to Jessica by email or LinkedIn.